HPC Cloud Services: Pay-as-You-Go Supercomputing Guide

In the traditional engineering landscape, your ability to innovate was strictly limited by the hardware sitting in your server room. If you had a 32-core workstation, your mesh size and physics complexity were capped by those 32 cores. If you needed to run a massive transient simulation to meet a client deadline, but the cluster was fully booked, you simply waited.

This hardware bottleneck is the single biggest friction point in R&D today. But the paradigm has shifted.

We are witnessing the democratization of supercomputing. With the maturation of HPC for CFD, the barriers to entry for high-fidelity engineering have crumbled. Whether you are a lean startup, a university research group, or a division of a Fortune 500 company, you no longer need a million-dollar capital budget to access effectively unlimited compute power.

In this guide, drawing on over 15 years of experience architecting on-demand supercomputing clusters, I will walk you through the business and technical mechanics of cloud HPC. We will move beyond the hype, look at the real-world ROI, and show how this model is shortening time to market for simulation-driven engineering teams worldwide.

Why Are Engineering Startups Moving Away from On-Premise Clusters?

For decades, the “Best Practice” for any serious engineering firm was to build an on-premise cluster. You bought racks of servers, hired an IT specialist, upgraded the cooling in your server room, and paid a hefty electricity bill.

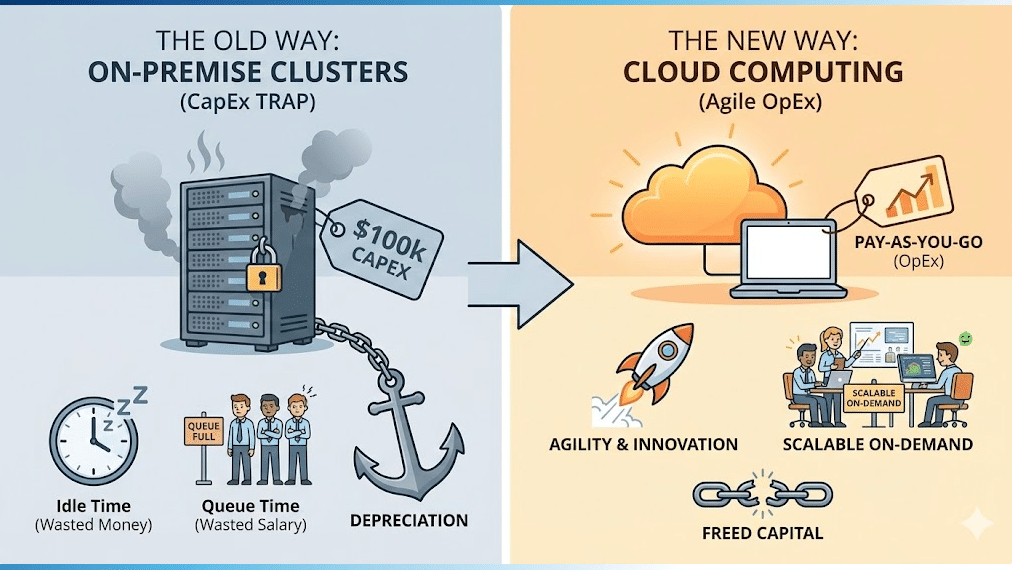

Today, however, I advise most startups against this approach, and a Total Cost of Ownership (TCO) analysis shows why. The shift toward cloud computing for engineering is driven by a simple financial reality: CapEx (Capital Expenditure) kills agility.

When you buy a $100,000 cluster, you are locking away capital that could be used for hiring talent or product development. Worse, that hardware begins depreciating the moment you turn it on. Within three to five years, it is obsolete. Furthermore, on-premise clusters have a “utilization paradox”:

- Idle Time: When you aren’t running simulations (e.g., weekends, meshing phases), that expensive hardware sits idle, wasting money.

- Queue Time: When a deadline hits and everyone needs to simulate, the cluster is full, and engineers sit idle, wasting salary.

By moving to the cloud, startups convert a massive fixed cost into a variable OpEx (Operational Expenditure). You don’t pay for the server room, the cooling, or the idle time. You simply pay for the answers you generate.
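To put a number on the utilization paradox, the sketch below divides a cluster's annual cost by the core-hours it actually spends solving. The $80,000 annual figure and the utilization levels are illustrative assumptions, not benchmarks; plug in your own accounting.

```python
# Effective cost of an owned cluster per *productive* core-hour.
# All input figures are illustrative assumptions, not vendor quotes.
HOURS_PER_YEAR = 8760


def effective_cost_per_core_hour(annual_cost: float, cores: int, utilization: float) -> float:
    """Annual cost divided by the core-hours actually spent solving.

    `utilization` is the fraction of the year the cores are busy, in (0, 1].
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    used_core_hours = cores * HOURS_PER_YEAR * utilization
    return annual_cost / used_core_hours


if __name__ == "__main__":
    annual_cost = 80_000.0  # hypothetical: amortized hardware + IT + power
    for u in (0.9, 0.5, 0.2):
        rate = effective_cost_per_core_hour(annual_cost, cores=512, utilization=u)
        print(f"utilization {u:.0%}: ${rate:.3f} per productive core-hour")
```

Halving utilization doubles the effective price of every core-hour you do use, which is exactly the cost a pay-per-use model avoids.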

What Exactly Are HPC Cloud Services and How Do They Work?

At their core, HPC cloud services are the “Uber” of supercomputing. Instead of buying a car (a cluster) that sits in your garage 90% of the time, you rent a high-performance vehicle only when you need to go somewhere fast.

Technically, this is distinct from standard cloud storage or basic web hosting. We are talking about bare-metal cloud servers or highly optimized Virtual Machines (VMs) specifically architected for scientific computing.

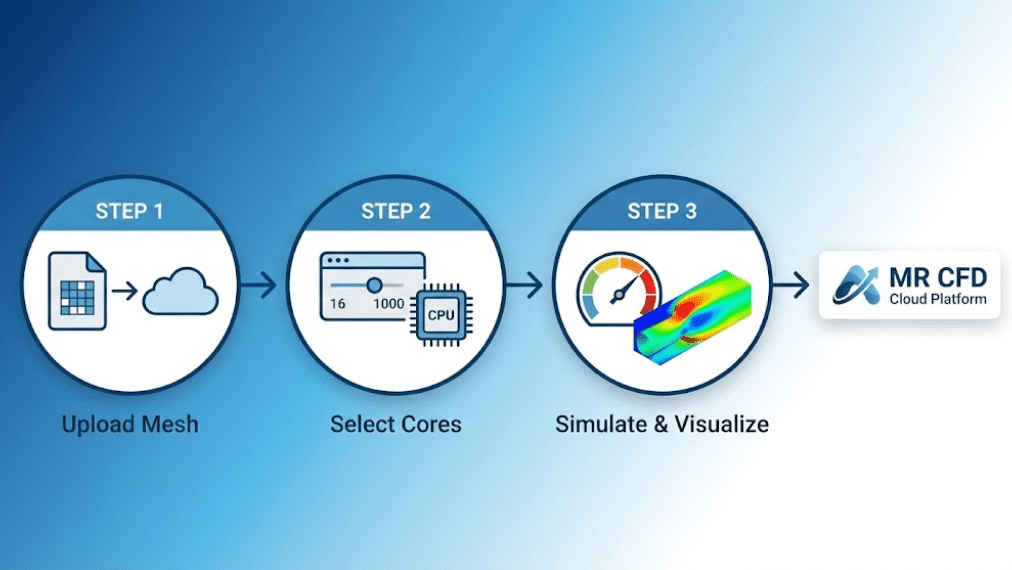

The workflow typically looks like this:

- Login: You access a secure portal (like the one we provide at MR CFD) via your web browser.

- Configuration: You select your hardware needs. Do you need 1,000 cores for a massive aerodynamic flow? Or a GPU node for a specialized solver?

- Upload: You transfer your .cas or mesh files to the cloud environment.

- Solve: You submit the job. The cloud scheduler allocates the resources, runs the simulation using MPI (Message Passing Interface), and frees the resources as soon as the job finishes.

- Visualize: You either download the results or, more commonly, use remote visualization for CFD to analyze the data directly on the cloud to avoid transferring terabytes of data.
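As a rough illustration of the Solve step, the sketch below renders a generic Slurm batch script that launches Fluent in batch mode across several nodes. It is not the MR CFD portal's internal tooling: the job name, walltime, and the journal file `run.jou` (which would read the case and iterate) are hypothetical, and real clusters usually also need site-specific MPI and interconnect options, so check the Fluent and Slurm documentation for your versions.

```python
def slurm_fluent_script(job_name: str, nodes: int, cores_per_node: int,
                        walltime: str, journal: str = "run.jou") -> str:
    """Return the text of a Slurm batch script that runs Fluent in batch mode.

    `journal` is a hypothetical Fluent journal that reads the case and iterates.
    """
    if nodes < 1 or cores_per_node < 1:
        raise ValueError("nodes and cores_per_node must be positive")
    total_procs = nodes * cores_per_node
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks-per-node={cores_per_node}",
        f"#SBATCH --time={walltime}",
        "",
        "# List the allocated nodes in a host file for Fluent's MPI launcher.",
        'scontrol show hostnames "$SLURM_JOB_NODELIST" > hosts.txt',
        "",
        "# 3-D double precision, no GUI (-g), one solver process per task (-t).",
        f"fluent 3ddp -g -t{total_procs} -cnf=hosts.txt -i {journal}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical job: 4 nodes x 128 cores = 512 MPI processes for 12 hours.
    print(slurm_fluent_script("wing-aero", nodes=4, cores_per_node=128, walltime="12:00:00"))
```

Saved as `job.sh`, the script would be submitted with `sbatch job.sh`. When it exits, Slurm releases the allocation, and in an elastic cloud cluster the idle nodes can then be shut down so billing stops.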

This model gives you access to infrastructure that would be impossible to build internally—such as clusters with hundreds of terabytes of RAM or thousands of the latest AMD EPYC cores—accessible with a few clicks.

How Does the “Pay-as-You-Go” Model Save You Money?

The economics of pay-as-you-go CFD simulation are compelling because they align costs directly with revenue-generating activities.

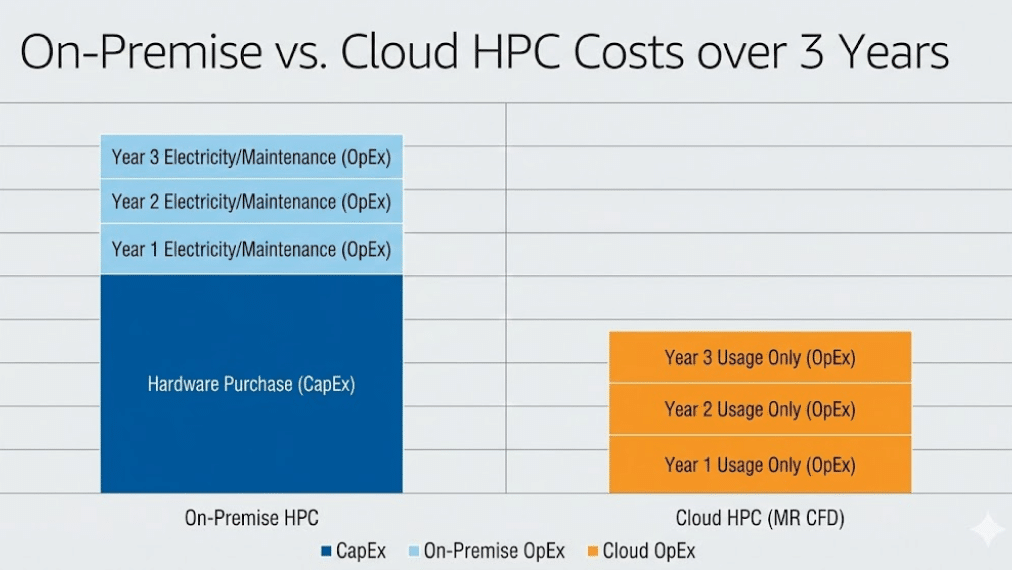

Let’s look at a comparative scenario. An engineering consultancy needs to run heavy simulations, but the workload is “bursty”—intense for two weeks, then quiet for two weeks.

Cost Comparison: On-Premise vs. Cloud HPC

| Cost Factor | On-Premise Cluster (512 Cores) | HPC Cloud Services (512 Cores) |
| --- | --- | --- |
| Upfront Hardware | $150,000+ (CapEx) | $0 |
| Maintenance/IT | $20,000 / year | $0 (Included in rate) |
| Electricity/Cooling | $12,000 / year | $0 (Included in rate) |
| Obsolescence | High (Replacements every 3-5 years) | Zero (Always access latest hardware) |
| Utilization Risk | You pay even if it sits idle. | You pay only when solving. |

In the OpEx-vs-CapEx debate over simulation hardware, the winner for variable workloads is clear. If you only need supercomputing power for 500 hours a year, buying a cluster is financial suicide. Cloud services let you punch above your weight class, bidding on projects that require massive compute power without permanently owning the infrastructure to support them.
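To see where the crossover sits, the sketch below annualizes the on-premise figures from the table (assuming straight-line depreciation over three years) and asks how many full-cluster hours per year the same money would buy in the cloud. The $0.05 per core-hour cloud price is a hypothetical placeholder, not an MR CFD or hyperscaler rate, and the model ignores licensing, storage, and data-transfer charges.

```python
def annual_onprem_cost(hardware: float, lifetime_years: float,
                       it_per_year: float, power_per_year: float) -> float:
    """Straight-line amortized hardware cost plus recurring yearly costs."""
    return hardware / lifetime_years + it_per_year + power_per_year


def breakeven_hours(annual_cost: float, cores: int, price_per_core_hour: float) -> float:
    """Full-cluster cloud hours per year that cost the same as owning."""
    return annual_cost / (cores * price_per_core_hour)


if __name__ == "__main__":
    CORES = 512
    PRICE = 0.05  # HYPOTHETICAL cloud price per core-hour; use a real quote
    onprem = annual_onprem_cost(150_000, 3, 20_000, 12_000)  # figures from the table
    hours = breakeven_hours(onprem, CORES, PRICE)
    cloud_500h = 500 * CORES * PRICE
    print(f"On-premise: ${onprem:,.0f}/year; break-even at {hours:,.0f} full-cluster hours/year")
    print(f"Cloud at 500 hours/year: ${cloud_500h:,.0f}")
```

Under these assumptions the owned cluster costs about $82,000 a year and only breaks even if it runs flat out for roughly 3,200 hours a year, while 500 hours in the cloud comes to about $12,800.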

Now, you might ask: Is the performance really there?

Can Cloud HPC Handle Massive Ansys Fluent Simulations?

A common misconception is that the cloud is “slow” due to virtualization overhead. Five years ago, this was partially true. Today, it is a myth we actively debunk at MR CFD.

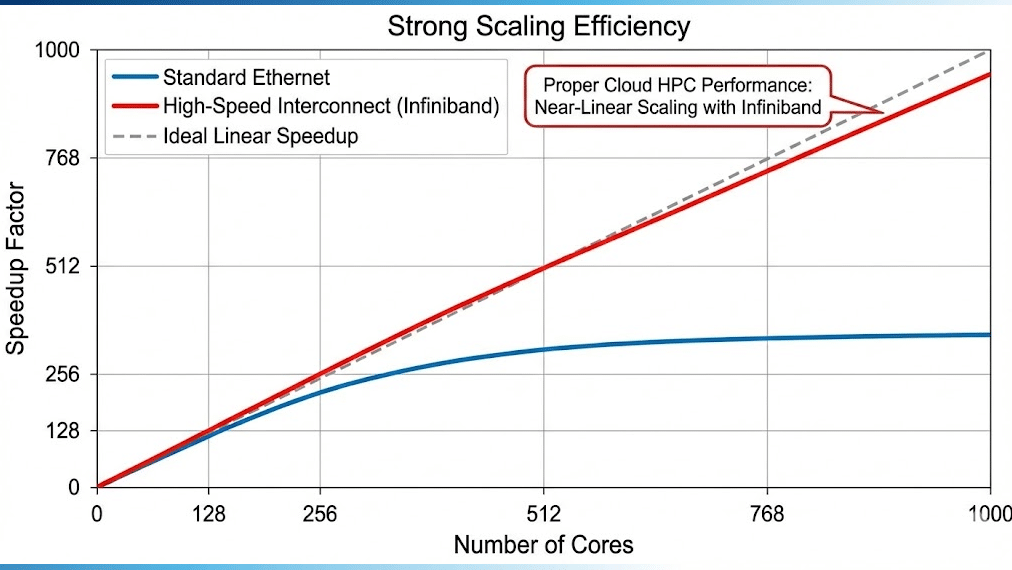

The secret sauce is the interconnect. In CFD, when you split a simulation across multiple nodes (e.g., 500+ cores), the nodes must exchange boundary data every iteration, so speed is limited by how fast they can talk to each other. Standard Ethernet has too much latency for this and quickly becomes the bottleneck.

Top-tier HPC cloud services use low-latency interconnects such as InfiniBand (e.g., NVIDIA Mellanox HDR) or AWS Elastic Fabric Adapter (EFA). These provide 100 Gbps to 400 Gbps of bandwidth with microsecond-scale latency.
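A simple way to see why the interconnect matters is the latency-bandwidth (Hockney) model, in which sending a message costs a fixed latency plus its size divided by bandwidth. The latency and bandwidth figures below are illustrative assumptions for a commodity Ethernet link and an HDR InfiniBand link, not measurements.

```python
def message_time_us(size_bytes: float, latency_us: float, bandwidth_gbps: float) -> float:
    """Hockney model: t = latency + size / bandwidth, returned in microseconds."""
    bytes_per_us = bandwidth_gbps * 1e9 / 8 / 1e6  # Gbit/s -> bytes per microsecond
    return latency_us + size_bytes / bytes_per_us


if __name__ == "__main__":
    # Illustrative link parameters (assumptions): (latency in us, bandwidth in Gbit/s).
    links = {"25 GbE (TCP)": (30.0, 25.0), "HDR InfiniBand": (1.0, 200.0)}
    for size in (8 * 1024, 8 * 1024 * 1024):  # small halo exchange vs. large transfer
        for name, (lat, bw) in links.items():
            print(f"{size:>9} B over {name:<15}: {message_time_us(size, lat, bw):9.1f} us")
```

Because a partitioned CFD solver exchanges many small halo messages every iteration, the fixed latency term dominates for those messages, and it is paid again on every iteration and across every partition boundary.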

Benchmarks typically show:

- Well-configured MPI clusters in the cloud often achieve 95-100% scaling efficiency up to thousands of cores, provided the mesh is large enough that each core still has a substantial share of cells to work on.

- For Ansys Fluent, cloud-native hardware often outperforms older on-premise clusters simply because cloud providers adopt new CPU generations (e.g., Intel Sapphire Rapids or AMD EPYC Genoa) faster than most private companies can afford to.
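Scaling claims are easy to check on your own model. The sketch below computes strong-scaling efficiency, E = (t_ref * n_ref) / (t_n * n), from wall-clock times for the same mesh at different core counts; the timings are hypothetical placeholders for your own benchmark runs.

```python
def parallel_efficiency(t_ref: float, n_ref: int, t_n: float, n: int) -> float:
    """Strong-scaling efficiency relative to a reference run.

    E = (t_ref * n_ref) / (t_n * n); 1.0 means perfectly linear speedup.
    """
    return (t_ref * n_ref) / (t_n * n)


if __name__ == "__main__":
    # Hypothetical wall-clock hours for one fixed mesh at increasing core counts.
    timings = {128: 10.0, 256: 5.1, 512: 2.7, 1024: 1.5}
    base_n = 128
    base_t = timings[base_n]
    for n, t in timings.items():
        e = parallel_efficiency(base_t, base_n, t, n)
        print(f"{n:5d} cores: {t:5.2f} h, speedup {base_t / t:5.2f}x, efficiency {e:.0%}")
```

If efficiency falls off sharply as cores increase, the mesh is usually too small for that many partitions, and each core spends proportionally more time communicating than computing.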

What is the Difference Between “Cloud Bursting” and Full Cloud Migration?

You don’t have to choose all or nothing. As an architect, I often design hybrid workflows.

- Cloud Bursting: You keep your small local cluster for steady-state, daily runs. But when a deadline hits, or you need to run a massive Design of Experiments (DoE), you “burst” the excess workload to the cloud. This handles peak loads without capital investment.

- Full Cloud Migration: You retire your local hardware entirely. Every simulation, from small test cases to final validation runs, happens on cloud HPC resources.

For startups, full migration is usually best. For established enterprises with existing hardware, cloud bursting for peak loads is usually the most cost-effective simulation strategy.
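The routing decision behind cloud bursting can be sketched as a greedy policy: fill the free local cores first and send whatever does not fit to the cloud instead of letting it queue. This is a hypothetical simplification for illustration; a production scheduler would also weigh deadlines, expected runtimes, license availability, and data location.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Job:
    name: str
    cores: int


def route_jobs(jobs: list[Job], local_free_cores: int) -> tuple[list[Job], list[Job]]:
    """Greedy cloud-bursting policy (hypothetical): local cluster first, overflow to cloud.

    Jobs are taken in submission order; a job that does not fit in the remaining
    local capacity is sent to the cloud instead of waiting in the local queue.
    """
    local: list[Job] = []
    cloud: list[Job] = []
    free = local_free_cores
    for job in jobs:
        if job.cores <= free:
            local.append(job)
            free -= job.cores
        else:
            cloud.append(job)
    return local, cloud


if __name__ == "__main__":
    # Hypothetical deadline crunch: 128 free local cores, four jobs submitted at once.
    queue = [Job("baseline", 64), Job("doe-01", 128), Job("doe-02", 128), Job("mesh-test", 32)]
    local, cloud = route_jobs(queue, local_free_cores=128)
    print("local:", [j.name for j in local])
    print("cloud:", [j.name for j in cloud])
```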

How Do MR CFD’s Managed HPC Services Simplify the Process?

Comparing AWS and Azure for HPC is worthwhile, but the raw cloud is intimidating. Setting up a cluster on AWS requires knowledge of the Linux CLI, VPC networking, storage mounting, and license server configuration. It is easy to make a mistake that results in a security hole or a surprise $5,000 bill.

MR CFD managed HPC acts as the bridge.

We provide a turnkey experience. We have pre-configured the environment and tuned it against industry-standard benchmarks specifically for Ansys Fluent, OpenFOAM, and CFX.

- No IT Setup: You log in, and the software is ready.

- Scheduler Optimization: We use optimized Slurm or PBS scripts that ensure your job runs on the most cost-effective hardware for your specific physics.

- Support: If a job crashes or a license hangs, you talk to a CFD engineer, not a generic cloud support bot.

We essentially act as your remote IT department, allowing you to focus on the fluid dynamics, not the Linux terminal.

Is Your Data Safe When Using Cloud Supercomputing?

This is the number one question I hear from technical directors. “Is my IP safe on someone else’s computer?”

Ironically, data security protocols in top-tier clouds are often stricter than in average engineering offices.

- Encryption: Data is encrypted in transit (uploading) and at rest (on the disk).

- Isolation: Your simulations run in a Virtual Private Cloud (VPC). No other user can see or access your compute nodes.

- Compliance: The underlying infrastructure is SOC 2 compliant and aligned with the ISO/IEC 27001 standard.

Compare this to an on-premise server room that might be unlocked, or a workstation where data is copied to unencrypted USB drives. For our clients at MR CFD, we implement strict access controls to ensure your proprietary geometry and results remain exclusively yours.

How Can Universities and Students Benefit from On-Demand HPC?

Students often face the “Queue of Death.” You submit your thesis simulation to the university cluster, and the scheduler says: Estimated Start Time: 4 Days.

HPC cloud services liberate students and researchers from these constraints.

- Instant Access: Spin up a 64-core cluster for a few hours to finish a project.

- Learning: Experience real-world cluster management. In our Ansys Fluent course, we often encourage students to run a cloud job to understand the difference between workstation solving and distributed parallel solving.

- Thesis Validation: Run larger, more accurate meshes to validate experimental data, elevating the quality of your research publication.

By moving to an on-demand model, universities can stop fighting over limited resources and start producing better research.

Frequently Asked Questions

Do I need a special license to run Ansys Fluent on the cloud?

Generally, yes. Most engineering software operates on a “Bring Your Own License” (BYOL) model. You connect the cloud cluster to your organization’s license server via a secure VPN. However, some providers and CFD simulation consulting partners can offer “on-demand” or “elastic” licensing for specific projects, allowing you to pay for the software hour just like the hardware hour.

How fast is the data transfer speed for uploading large mesh files?

Upload speeds depend on your local internet connection, but cloud providers have massive inbound bandwidth. To optimize this, we recommend compressing files (e.g., gzip-compressed .gz files, or Fluent's HDF5-based .h5 case and data formats, which support built-in compression). More importantly, we advocate for remote visualization for CFD. Instead of downloading a 500GB result file, you keep the data on the cloud and stream the visual desktop to your laptop. This makes transfer speed largely irrelevant for post-processing.
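For files you do upload, compression can be scripted with Python's standard `gzip` module. The sketch streams the file in chunks so multi-gigabyte meshes do not need to fit in memory; the demo file is synthetic, and real compression ratios depend heavily on the file format (ASCII meshes usually compress far better than binary ones).

```python
from __future__ import annotations

import gzip
import shutil
import tempfile
from pathlib import Path


def gzip_file(src: str | Path, level: int = 6) -> Path:
    """Stream-compress `src` to `src.gz` in chunks, without loading it into memory."""
    src = Path(src)
    dst = src.with_name(src.name + ".gz")
    with open(src, "rb") as fin, gzip.open(dst, "wb", compresslevel=level) as fout:
        shutil.copyfileobj(fin, fout, 16 * 1024 * 1024)  # copy in 16 MiB chunks
    return dst


if __name__ == "__main__":
    # Demo on a throwaway file; in practice point this at your mesh or case file.
    with tempfile.TemporaryDirectory() as tmp:
        mesh = Path(tmp) / "demo.msh"  # hypothetical file name
        mesh.write_text("1.0 2.0 3.0\n" * 100_000)
        packed = gzip_file(mesh)
        ratio = packed.stat().st_size / mesh.stat().st_size
        print(f"{mesh.name}: compressed to {ratio:.1%} of original size")
```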

Can I set budget limits to prevent accidental overspending?

Absolutely. This is a standard feature in MR CFD managed HPC environments. We can set hard caps (e.g., “$500 project limit”) or alerts. If a simulation goes rogue or an engineer forgets to turn off a machine, the system can auto-terminate to protect your budget. This predictability is essential for approving OpEx budgets.
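The alert-then-terminate logic can be sketched in a few lines. The `BudgetGuard` class below is hypothetical, not MR CFD's implementation; it assumes that something (a polling loop or a billing hook) feeds it cost increments, for example running nodes times hourly price, and acts on the status it returns.

```python
from dataclasses import dataclass


@dataclass
class BudgetGuard:
    """Hypothetical spend tracker: alert at a soft threshold, stop at a hard cap."""

    cap: float                    # hard limit, e.g. a $500 project budget
    alert_fraction: float = 0.8   # warn once spend reaches 80% of the cap
    spent: float = 0.0
    alerted: bool = False

    def record(self, cost: float) -> str:
        """Add a cost increment; return 'ok', 'alert', or 'terminate'."""
        self.spent += cost
        if self.spent >= self.cap:
            return "terminate"
        if not self.alerted and self.spent >= self.alert_fraction * self.cap:
            self.alerted = True
            return "alert"
        return "ok"


if __name__ == "__main__":
    guard = BudgetGuard(cap=500.0)
    # Hypothetical hourly charges: 4 nodes x $30/hour each = $120 per hour.
    for hour in range(1, 6):
        status = guard.record(4 * 30.0)
        print(f"hour {hour}: spent ${guard.spent:.0f} -> {status}")
        if status == "terminate":
            break  # a real system would shut down the nodes here
```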

What happens if my simulation crashes mid-run on the cloud?

Cloud infrastructure is highly redundant, but software crashes happen. We always recommend configuring your solver (Fluent/OpenFOAM) to write “autosave” or “checkpoint” files frequently. If an instance fails (which is rare), you can restart the run from the last checkpoint on a new node within minutes. This resilience is often better than a local workstation that shuts down during a power outage.
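The checkpoint/restart pattern itself is solver-independent. In the sketch below, a toy loop stands in for solver iterations (in Fluent or OpenFOAM the solver's own autosave or write-interval settings do this job). State is written every `every` iterations through a temporary file and a rename, so a job killed mid-write still leaves the previous checkpoint intact, and a rerun resumes from the last saved iteration. The file names and JSON state format are assumptions for illustration.

```python
import json
import os
import tempfile
from pathlib import Path


def save_checkpoint(path: Path, state: dict) -> None:
    """Write to a temp file, then rename over the old checkpoint.

    os.replace is atomic on POSIX filesystems, so a process killed mid-write
    leaves the previous checkpoint intact.
    """
    tmp = path.with_name(path.name + ".tmp")
    tmp.write_text(json.dumps(state))
    os.replace(tmp, path)


def load_checkpoint(path: Path) -> dict:
    """Resume from the last checkpoint, or start fresh if none exists."""
    if path.exists():
        return json.loads(path.read_text())
    return {"iteration": 0, "residual": 1.0}


def run(path: Path, total_iters: int, every: int) -> dict:
    state = load_checkpoint(path)
    for it in range(state["iteration"], total_iters):
        state["residual"] *= 0.9  # toy stand-in for one solver iteration
        state["iteration"] = it + 1
        if state["iteration"] % every == 0:
            save_checkpoint(path, state)
    return state


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        ckpt = Path(tmp) / "checkpoint.json"  # hypothetical file name
        run(ckpt, total_iters=25, every=10)  # pretend the job dies after iteration 25
        print("restarting from iteration", load_checkpoint(ckpt)["iteration"])
        final = run(ckpt, total_iters=50, every=10)
        print("finished at iteration", final["iteration"])
```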

Is cloud HPC suitable for steady-state or only transient simulations?

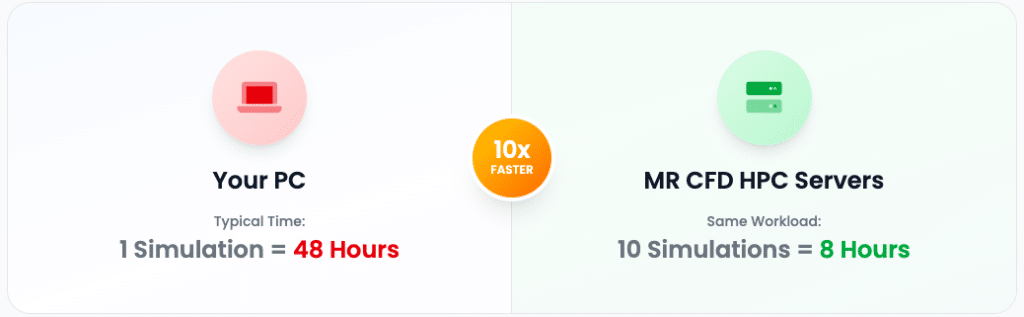

It is suitable for both, but the ROI is different. For small steady-state RANS cases that take 2 hours on a laptop, the cloud might be overkill. However, for large steady-state aerodynamics (100M+ cells) or transient cases that write large volumes of data, the cloud is superior. The ability to run 10 design variations in parallel (capacity computing) rather than one after another is where the true value lies, regardless of the solver physics.
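The capacity-computing argument is simple arithmetic. Assuming each design variant takes the same time on the same hardware and billing is per core-hour, running variants side by side leaves total core-hours (and so cost) unchanged while cutting wall-clock time. The 10 variants, 6 hours each, and 64 cores per case below are hypothetical.

```python
import math


def makespan_hours(n_cases: int, hours_per_case: float, concurrent: int) -> float:
    """Wall-clock time to finish n independent cases, `concurrent` at a time."""
    waves = math.ceil(n_cases / concurrent)
    return waves * hours_per_case


def total_core_hours(n_cases: int, hours_per_case: float, cores_per_case: int) -> float:
    """Billed core-hours, independent of how many cases run at once."""
    return n_cases * hours_per_case * cores_per_case


if __name__ == "__main__":
    n, hours, cores = 10, 6.0, 64  # hypothetical DoE: 10 variants, 6 h each on 64 cores
    for concurrent in (1, 5, 10):
        print(f"{concurrent:2d} at a time: {makespan_hours(n, hours, concurrent):5.1f} h wall-clock, "
              f"{total_core_hours(n, hours, cores):,.0f} core-hours billed")
```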
